A United Nations panel has warned that brain chip technology being pioneered by Elon Musk could be abused for ‘neurosurveillance’ violating ‘mental privacy,’ or ‘even to implement forms of forced re-education,’ threatening human rights worldwide.

The UN’s agency for science and culture (UNESCO) said neurotechnology like Musk’s Neuralink, if left unregulated, will lead to ‘new possibilities of monitoring and manipulating the human mind through neuroimaging’ and ‘personality-altering’ tech.

UNESCO is now strategizing on a worldwide ‘ethical framework’ to protect humanity from potential abuses of the technology, which it fears will be accelerated by advances in AI.

‘We are on a path to a world in which algorithms will enable us to decode people’s mental processes,’ said UNESCO’s assistant director-general for social and human sciences, Gabriela Ramos.

The implications are ‘far-reaching and potentially harmful,’ Ramos said, given breakthroughs in neurotechnology that could ‘directly manipulate the brain mechanisms’ in humans, ‘underlying their intentions, emotions and decisions.’

The committee’s warnings come less than two months after the US Food and Drug Administration (FDA) gave Elon Musk’s brain-chip implant company Neuralink federal approval to conduct trials on humans.

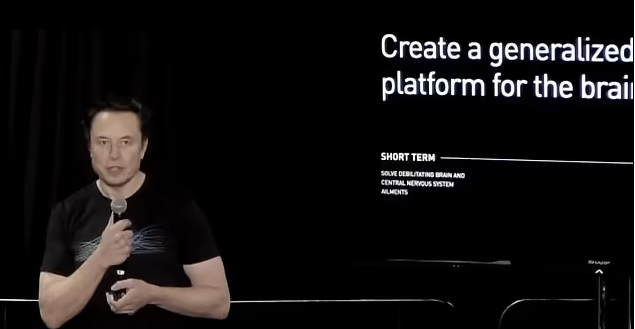

Neurotechnology like Musk’s Neuralink implants will connect the brain to computing power via thread-like electrodes sewn into certain areas of the brain.

Neuralink’s electrodes will communicate with a chip to read signals produced by special cells in the brain called neurons, which transmit messages to other cells in the body, like our muscles and nerves.

Because neuron signals are translated directly into motor controls, Neuralink could allow humans to control external technologies, such as computers or smartphones, or lost bodily functions or muscle movements, with their minds.

‘It’s like replacing a piece of the skull with a smartwatch,’ Musk has said.

But those communications pathways, as the UNESCO panel warned, cut both ways.

This May, scientists at the University of Texas at Austin revealed they were able to train an AI to effectively read people’s minds, converting brain data from test subjects taken via functional magnetic resonance imaging (fMRI) into written words.

UNESCO economist Mariagrazia Squicciarini, who specializes in artificial intelligence issues, noted that the capacity for machine learning algorithms to rapidly pull patterns out of complex data, like fMRI brain scans, will accelerate brain chips’ access to the human mind.

‘It’s like putting neurotech on steroids,’ Squicciarini said.

UNESCO convened a 1000-participant conference of its International Bioethics Committee (IBC) in Paris last Thursday and released recommendations Tuesday.

‘The spectacular development of neurotechnology as well as new biotechnologies, nanotechnologies and ICTs makes machines more and more humanoid,’ the IBC said in its report, ‘and people are becoming more connected to machines and AI.’

Last week, the IBC weighed numerous dystopian scenarios seemingly out of science fiction in its effort to get ahead of rapidly advancing threats to human ‘neurorights,’ which have yet to be codified under international law.

‘It is necessary to anticipate the effects of implementing neurotechnology,’ the UN panel noted. ‘There is a direct connection between freedom of thought, the rule of law and democracy.’

Among the many recommendations in its 91-page report, the IBC called for wider transparency on neurotech research from industry and academia, as well as the drafting of ‘neurorights’ for inclusion in international human rights law.

The IBC report described the new tech in stark terms as a challenge to ‘some basic aspects of human dignity, such as privacy of mental life or individual agency.’

But UNESCO’s assistant director-general for social and human sciences, Gabriela Ramos, put the converging power of neurotechnology and AI into even starker terms.

Content by: MATTHEW PHELAN